The Neuroscience Behind Vision, Photography, and Cameras

Given that almost all of us walk around with a highly capable, high resolution camera in our pockets, it seems generally safe to assume that we have all probably taken at least one picture in our lifetimes. For better or for worse, cell phone cameras have dramatically transformed the field of photography. It is not known whether this transformation has elevated photography as an art form, but it is virtually incontrovertible that photography is now more popular and accessible than ever before.

With this dramatic increase in the number of people taking and editing pictures, it is no surprise that companies like Instagram, Twitter, and VSCO have evolved to capitalize on this phenomenon, offering all-in-one platforms to help you take, edit, and ultimately share your pictures. While much internet space has been devoted to what photo gear to buy and how and where to take the best selfie, comparatively less attention has been paid to understanding what photographers think about, or should think about, when they decide to take a picture.

As both a neuroscientist and a freelance photographer, Adam Brockett, PhD, finds this question endlessly fascinating. Across three guest posts, Dr. Brockett focuses on the ways in which the neuroscience of visual perception impacts our appreciation of photography. These pieces help uncover how the brain sees an image, what it detects, and ultimately how to use the neuroscience of perception to take better photos. Here in post 1, he discusses the neuroscience of vision, the science of cameras, and the fundamental differences between eyes and cameras.

How Cameras and the Human Eye Help Us Interpret the Environment

It may seem obvious, but just in case…cameras are not eyes. Cameras are portable, self-contained devices consisting of a lens, a shutter mechanism, and some sort of medium (i.e., film or digital sensor) on which to record an image. The eye, on the other hand, is largely a mass of tissue filled with fluid that is permanently stuck in our heads. A camera is made from metal and plastic, and an eye is entirely composed of cells. Even beyond these physical differences, the purpose of a camera, at least with respect to photography, is far different from that of the eye.

A camera’s main purpose is to reproduce a scene with high fidelity, whereas our eyes do not make static images at all, but instead interpret our environment through a constant stream of snapshots. Nevertheless, both allow us to see and understand our world; understanding how each works is essential to understanding what a photo is.

Whether you are talking about a cell phone camera or a top-of-the-line digital camera, all digital cameras capture images in more or less the same way. Just behind the lens sits a small sensor composed of light-capturing units called pixels. These pixels are organized into orderly rows and columns and overlaid with a color filter, which lets the camera convert the light each pixel collects into color information.

While pixel size, density, and orientation are the typical features over which camera manufacturers compete for consumer attention (and do have their place in determining the exact quality of what a camera produces), in the end, the sensor of any camera is ultimately a highly optimized piece of technology meant to produce a fairly accurate static image of the world.

Today, most consumer cameras contain a complementary metal-oxide semiconductor (CMOS) sensor. These sensors contain arrays of photosites, or pixels, that take in as much light as possible for as long as the shutter of the camera is held open. On top of these photosites is typically a filter, known as a Bayer filter, that lets each photosite record only one color of light: red, blue, or green.

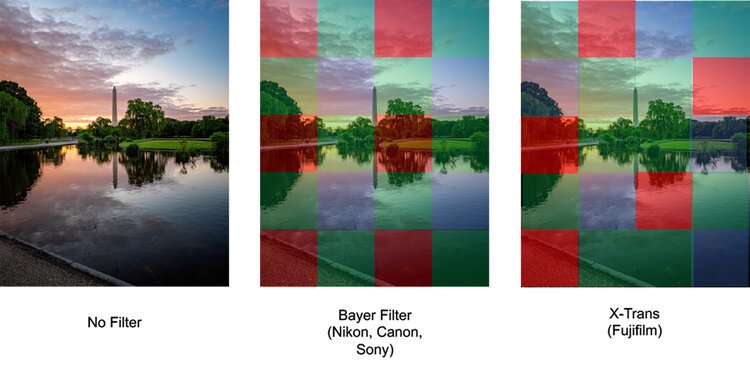

As the figure above shows, camera sensors tend to adopt one of two configurations. The standard Bayer filter array, seen in most cell phones and in Nikon, Sony, and Canon cameras, orders pixels in uniformly repeating patterns of red, blue, and green. Fujifilm, by contrast, has adopted a proprietary configuration known as X-Trans, which orders pixels in a slightly different pattern that the company says produces better image quality. Regardless of the configuration a manufacturer adopts, some sort of processing must occur before an image can be produced. This is because each pixel occupies only a small unit of image space and can report to the camera’s processors only the amount of red, blue, or green light that falls in that space.

The magic of digital photography is that processors in the camera then make educated guesses about what the other colors at each photosite might be, based on the amount of red, blue, or green light reported by it and its neighbors. This guesswork is not perfect but has been optimized to the point that it is exceedingly difficult for the average person to notice any real difference between the blue estimated at a red pixel and the blue measured by a blue pixel.
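To make this guesswork concrete, here is a minimal Python sketch of the idea. It assumes one common Bayer layout (red and green alternating on even rows, green and blue on odd rows) and fills in the missing colors by averaging nearby photosites of the right color. The function names (`bayer_channel`, `mosaic`, `demosaic`) are invented for this illustration, the image must be at least 2 x 2 pixels, and real cameras use far more sophisticated methods than simple averaging.

```python
# Illustrative sketch only: the names and the averaging rule are assumptions,
# not how any particular camera actually processes its sensor data.

def bayer_channel(row, col):
    """Which color (0 = red, 1 = green, 2 = blue) the photosite at (row, col)
    records under an assumed layout of R G on even rows and G B on odd rows."""
    if row % 2 == 0:
        return 0 if col % 2 == 0 else 1
    return 1 if col % 2 == 0 else 2

def mosaic(image):
    """Keep a single color value per photosite, as a filtered sensor would.
    `image` is a list of rows, each row a list of (r, g, b) tuples."""
    return [[pixel[bayer_channel(r, c)] for c, pixel in enumerate(row)]
            for r, row in enumerate(image)]

def demosaic(raw):
    """Estimate full (r, g, b) at every photosite by averaging the samples of
    each color found in the surrounding 3x3 neighborhood (clipped at edges)."""
    height, width = len(raw), len(raw[0])
    out = []
    for r in range(height):
        out_row = []
        for c in range(width):
            sums, counts = [0.0, 0.0, 0.0], [0, 0, 0]
            for rr in range(max(0, r - 1), min(height, r + 2)):
                for cc in range(max(0, c - 1), min(width, c + 2)):
                    channel = bayer_channel(rr, cc)
                    sums[channel] += raw[rr][cc]
                    counts[channel] += 1
            out_row.append(tuple(sums[ch] / counts[ch] for ch in range(3)))
        out.append(out_row)
    return out

# A flat orange scene: every pixel is (red=200, green=100, blue=50).
scene = [[(200, 100, 50)] * 4 for _ in range(4)]
raw = mosaic(scene)
print(raw[0])  # [200, 100, 200, 100]  (red, green, red, green)
restored = demosaic(raw)
```

On a flat patch of color like this one, the averaged guess matches the original exactly. Where colors change sharply from one pixel to the next, simple averaging smears the edge, which is one reason manufacturers invest so heavily in better processing.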

The Neuroscience of Color Vision / Photo by Adam Brockett

How Camera Sensors Compare to the Neuroanatomy of the Human Eye

Much like the camera, the back of the eye, which sits behind the lens of the eye, is lined with a sheet of light-sensitive tissue known as the retina. The retina is composed of several layers of different cell types, including the light-detecting cells known as photoreceptors. Generally speaking, the human retina contains two types of photoreceptors: rods, which help us see light and dark, and cones, which help us see color. At this point, it might sound like a camera sensor and an eye’s retina are basically the same thing, but this is where the similarity between the two ends.

Unlike the camera sensor and its repeating columns of evenly spaced red, blue, and green pixels, rods and cones are not uniformly distributed across the surface of the retina. Cones tend to be clustered in high numbers around an area of the retina known as the fovea. The fovea is a specialized part of the retina that is responsible for providing our brains with the clearest, most high-resolution pictures of our visual environment. While areas surrounding the fovea also have cones, they are much less concentrated and, together with the rods, produce an image that is comparatively blurrier and lower resolution.
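One way to get a feel for this uneven sampling is to simulate it. The Python sketch below takes a grayscale image and a fixation point, leaves the region around fixation untouched, and averages each remaining pixel over a window that grows with distance from fixation. The function name `foveated_view` and the parameters `sharp_radius` and `falloff` are hypothetical, chosen only for illustration; the steady growth in blur is a convenient assumption and not a measurement of how cone density actually changes across the retina.

```python
# Illustrative sketch only: not a physiological model of the retina.

def foveated_view(image, fix_row, fix_col, sharp_radius=1, falloff=2):
    """Return a copy of a grayscale image (list of rows of numbers) that is
    sharp near the fixation point and increasingly blurred farther away."""
    height, width = len(image), len(image[0])
    out = []
    for r in range(height):
        out_row = []
        for c in range(width):
            # Distance from the fixation point, in pixels.
            distance = max(abs(r - fix_row), abs(c - fix_col))
            # Half-width of the averaging window: zero near fixation,
            # growing by one pixel for every `falloff` pixels beyond it.
            half = max(0, distance - sharp_radius) // falloff
            total, count = 0.0, 0
            for rr in range(max(0, r - half), min(height, r + half + 1)):
                for cc in range(max(0, c - half), min(width, c + half + 1)):
                    total += image[rr][cc]
                    count += 1
            out_row.append(total / count)
        out.append(out_row)
    return out

# A 9x9 checkerboard of black (0) and white (255) squares, fixated at its center.
board = [[255 if (r + c) % 2 else 0 for c in range(9)] for r in range(9)]
view = foveated_view(board, fix_row=4, fix_col=4)
print(view[4][4])  # 0.0   (center keeps its original value)
print(view[0][0])  # 127.5 (corner is averaged into gray)
```

The fine checkerboard detail survives at the center of gaze but washes out toward the edges, which is roughly the situation the brain has to work with on every glance.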

While the camera might be capable of making accurate guesses about color, this level of processing is nothing compared to what the eye can do. Remember that photoreceptors are not organized uniformly, so our brains must make sense of a visual scene that arrives piece by piece, partly sharp and partly blurry, and technically upside down. How does the brain do this? The eye partially solves this problem by sampling multiple high-resolution snapshots from a visual scene. Muscles in our neck and head, along with those surrounding our eyes, help keep the meaningful parts of a visual scene (e.g., traffic lights or the person talking to you) centered on the fovea.

The rods and cones take in light and color information and transmit it through a web of cells, each tasked with tracking certain features of the visual environment. Importantly, groups of photoreceptors send information to individual output cells of the retina known as retinal ganglion cells. Each retinal ganglion cell in turn responds to a particular combination of features (light intensity, contrast, color, location, etc.) and passes that information along to the visual cortex of our brains.

Because our eyes don’t specialize in creating static images, cells in our retina are often interested in features in our visual environment other than color and light. The movement of objects around us is often just as important as color and light information. Movement information is first detected by our peripheral vision (i.e., vision outside the fovea) and can trigger sudden adjustments in eye position, called saccades, which help align the moving object with our fovea. This in turn allows us to better assess what is going on and how to react.

There are lots of great resources that discuss how the eye works in more detail (Masland, 2020; Snowden et al., 2012), but for now it is important to remember that our eyes are not simply camera sensors. Our brains are constantly receiving multiple pieces of information about our visual world. Much like a puzzle, our brains must sort through this information to understand what it is we’re looking at. This constant processing and reprocessing of visual information helps ensure that we have a good understanding of what is going on in any given visual scene. However, what we take away from that visual scene and how accurately we represent this information is very different from how a camera captures a scene. In the next section, we will explore some of the parts of a photograph/visual scene that our brains are most interested in.

Photo by Adam Brockett

About the author

Dr. Adam Brockett is an NRSA-funded postdoctoral fellow working in the lab of Dr. Matthew Roesch at the University of Maryland, College Park. Prior to joining the Roesch Lab, Adam received his PhD in Psychology and Neuroscience from Princeton University under the mentorship of Professor Elizabeth Gould. Adam’s research explores the intersection of experience and behavior, primarily focusing on how neurons and glia in the frontal areas of our brain support decision-making across the lifespan, and what happens when these processes go awry. When not in the lab, Adam works as a freelance photographer in the DC area, amassing over 11,000 followers on his Instagram. Adam specializes mostly in landscape and architectural photography and has had work featured by Southern Living, The Washington Post, and Delaware Today.

References for The Neuroscience and Psychology of Attention and its Impact in Photography

Bosco, A., Lappe, M., & Fattori, P. (2015). Adaptation of Saccades and Perceived Size after Trans-Saccadic Changes of Object Size. The Journal of Neuroscience: The Official Journal of the Society for Neuroscience, 35(43), 14448–14456. https://doi.org/10.1523/JNEUROSCI.0129-15.2015

Brockett, A. T., Pribut, H. J., Vazquez, D., & Roesch, M. R. (2018). The impact of drugs of abuse on executive function: Characterizing long-term changes in neural correlates following chronic drug exposure and withdrawal in rats. Learning & Memory (Cold Spring Harbor, N.Y.), 25(9), 461–473. https://doi.org/10.1101/lm.047001.117

Brockett, A. T., & Roesch, M. R. (2020). The ever-changing OFC landscape: What neural signals in OFC can tell us about inhibitory control. Behavioral Neuroscience. https://doi.org/10.1037/bne0000412

Brockett, A. T., Vázquez, D., & Roesch, M. R. (2021). Prediction errors and valence: From single units to multidimensional encoding in the amygdala. Behavioural Brain Research, 113176. https://doi.org/10.1016/j.bbr.2021.113176

Canon USA. (2015). Canon—Obsession Experiment.

Farroni, T., Johnson, M. H., Menon, E., Zulian, L., Faraguna, D., & Csibra, G. (2005). Newborns’ preference for face-relevant stimuli: Effects of contrast polarity. Proceedings of the National Academy of Sciences of the United States of America, 102(47), 17245–17250. https://doi.org/10.1073/pnas.0502205102

Gershoni, S., & Kobayashi, H. (2006). How We Look at Photographs as Indicated by Contrast Discrimination Performance Versus Contrast Preference. Journal of Imaging Science and Technology, 50(4), 320–326. https://doi.org/10.2352/J.ImagingSci.Technol.(2006)50:4(320)

Henkel, L. A. (2014). Point-and-shoot memories: The influence of taking photos on memory for a museum tour. Psychological Science, 25(2), 396–402. https://doi.org/10.1177/0956797613504438

Ikeda, T., Matsuyoshi, D., Sawamoto, N., Fukuyama, H., & Osaka, N. (2015). Color harmony represented by activity in the medial orbitofrontal cortex and amygdala. Frontiers in Human Neuroscience, 9. https://doi.org/10.3389/fnhum.2015.00382

Ishizu, T., & Zeki, S. (2011). Toward a brain-based theory of beauty. PloS One, 6(7), e21852. https://doi.org/10.1371/journal.pone.0021852

Jain, A., Bansal, R., Kumar, A., & Singh, K. (2015). A comparative study of visual and auditory reaction times on the basis of gender and physical activity levels of medical first-year students. International Journal of Applied and Basic Medical Research, 5(2), 124–127. https://doi.org/10.4103/2229-516X.157168

Jones, B. C., DeBruine, L. M., & Little, A. C. (2007). The role of symmetry in attraction to average faces. Perception & Psychophysics, 69(8), 1273–1277. https://doi.org/10.3758/bf03192944

Lu, Z., Guo, B., Boguslavsky, A., Cappiello, M., Zhang, W., & Meng, M. (2015). Distinct effects of contrast and color on subjective rating of fearfulness. Frontiers in Psychology, 6, 1521. https://doi.org/10.3389/fpsyg.2015.01521

Masland, R. (2020). We know it when we see it: What the neurobiology of vision tells us about how we think (1st ed.). Basic Books.

Rouhani, N., Norman, K. A., Niv, Y., & Bornstein, A. M. (n.d.). Reward prediction errors create event boundaries in memory. 38.

Sawh, K. (2015). Canon’s “Obsession Experiment” | See How The Average Person vs Pro Views Image Details. https://www.slrlounge.com/canons-obsession-experiment-see-average-person-vs-pro-views-image-details/

Snowden, R., Thompson, P., & Troscianko, T. (2012). Basic Vision: An Introduction to Visual Perception. OUP Oxford.

Tinio, P. P. L., Leder, H., & Strasser, M. (2011). Image quality and the aesthetic judgment of photographs: Contrast, sharpness, and grain teased apart and put together. Psychology of Aesthetics, Creativity, and the Arts, 5(2), 165–176. https://doi.org/10.1037/a0019542

Valentine, T., Darling, S., & Donnelly, M. (2004). Why are average faces attractive? The effect of view and averageness on the attractiveness of female faces. Psychonomic Bulletin & Review, 11(3), 482–487. https://doi.org/10.3758/bf03196599

Zeki, S. (1998). Art and the Brain. Daedalus, 127(2), 71–103.